
Multi-Agent Testing: How AI Agents Solve the Context Problem

Multi-agent testing is emerging as a transformative approach in artificial intelligence (AI) and software quality assurance. It addresses one of the field’s biggest hurdles: context. AI models excel at pattern recognition and automation, but they often struggle to understand user intent or adapt to dynamic environments.

By using multiple AI agents that collaborate, critique one another, and share knowledge, multi-agent testing aims to deliver results that are more reliable, more scalable, and better aligned with real-world complexity.

The Context Problem in AI

AI systems are only as good as their ability to interpret situations correctly. When context is missing, even the most advanced models can misfire, producing outputs that are technically correct but practically useless.

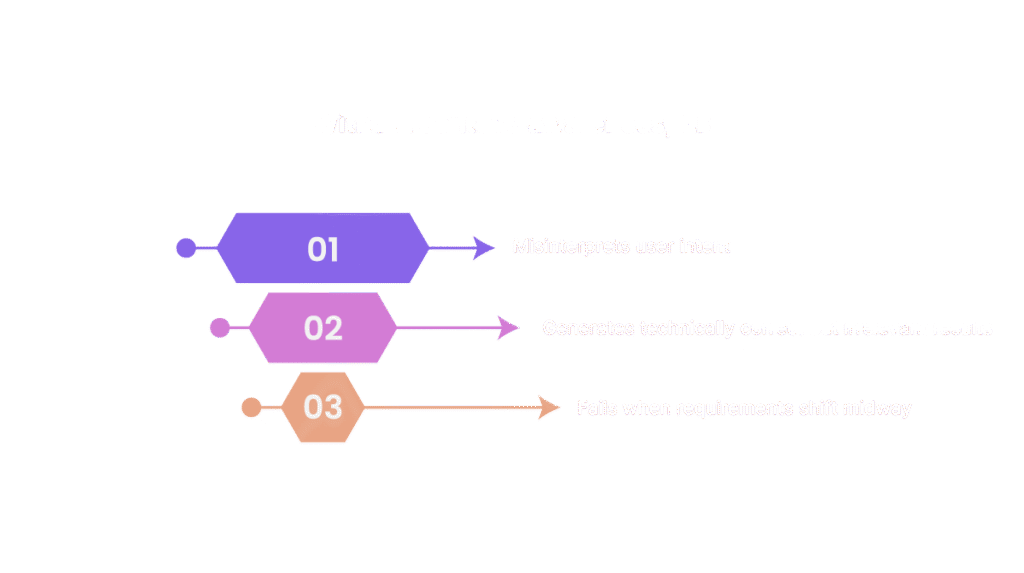

Without context-awareness, AI can:

- Misinterpret user intent

- Generate technically correct but irrelevant results

- Fail when requirements shift midway

Example: In software testing, a single AI agent may verify that a button works but miss how its behavior should change across user roles or permissions. Gaps like this are why single-agent systems often fall short.
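The button example can be made concrete with a minimal Python sketch. The permission table, `render_delete_button`, and both check functions are hypothetical stand-ins for an application and its tests. The point is only that a check which knows the role context catches a defect that a context-free check passes.

```python
from dataclasses import dataclass

# Hypothetical expectations: which roles should be able to use a "Delete account" button.
EXPECTED_ENABLED = {"admin": True, "editor": False, "viewer": False}


@dataclass
class ButtonState:
    visible: bool
    enabled: bool


def render_delete_button(role: str) -> ButtonState:
    """Stand-in for the application under test, with a deliberate bug."""
    # Bug: editors are wrongly given an enabled button.
    return ButtonState(visible=True, enabled=role in ("admin", "editor"))


def functional_check() -> list[str]:
    """Context-free check: does the button work at all (tested as an admin)?"""
    state = render_delete_button("admin")
    return [] if state.visible and state.enabled else ["button not usable"]


def role_aware_check() -> list[str]:
    """Context-aware check: does the button behave correctly for every role?"""
    issues = []
    for role, should_enable in EXPECTED_ENABLED.items():
        actual = render_delete_button(role).enabled
        if actual != should_enable:
            issues.append(f"{role}: expected enabled={should_enable}, got {actual}")
    return issues


print(functional_check())  # []
print(role_aware_check())  # ['editor: expected enabled=False, got True']
```

The functional check reports no problems, while the role-aware check flags that editors can delete accounts. This is exactly the kind of context a single, narrowly scoped agent can miss.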

What is Multi-Agent Testing?

Multi-agent testing brings together multiple AI agents in a coordinated system to test, analyze, and validate solutions. Each agent plays a specialized role, such as compliance auditor, functionality checker, or user-experience evaluator, and together they mimic the diverse strengths of a human testing team.

Unlike traditional single-agent AI, which can get trapped in narrow interpretations, multi-agent systems collaborate, cross-check one another’s reasoning, and compensate for individual blind spots. This teamwork makes them well suited to addressing the context problem.

How Multi-Agent Systems Solve the Context Challenge

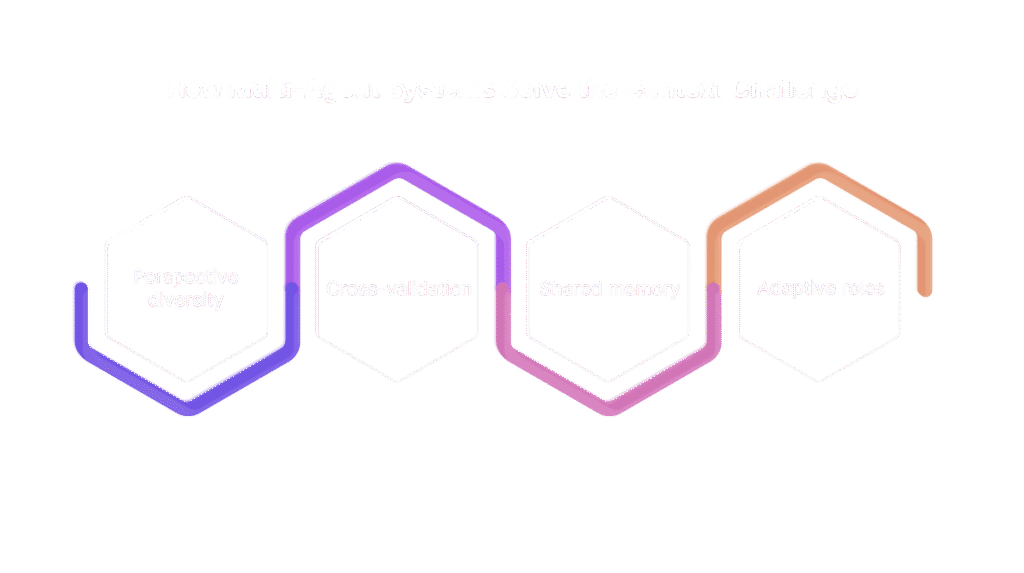

Multi-agent testing overcomes blind spots through collaboration and orchestration:

- Perspective diversity: Agents evaluate situations from technical, functional, and user-centric angles.

- Cross-validation: Agents review each other’s findings, reducing false positives and missed issues.

- Shared memory: Agents build a collective knowledge base that retains context across tasks.

- Adaptive roles: The orchestrator reallocates agent responsibilities (test generation, execution, triage) in response to policy triggers and runtime signals.
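The collaboration patterns above can be sketched in a few lines of Python. This is a hypothetical illustration, not how any particular tool works. Agents are plain functions, `SharedMemory` is an in-process object rather than a persistent store, and cross-validation is reduced to a simple agreement vote: a target flagged by at least two independent agents is treated as corroborated, and single-agent flags are routed to review instead of being discarded. Adaptive role reallocation is left out for brevity.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class Finding:
    agent: str
    target: str  # the screen, endpoint, or flow the finding refers to
    note: str


@dataclass
class SharedMemory:
    """Context that every agent can read and extend during a run."""
    facts: dict = field(default_factory=dict)
    findings: list = field(default_factory=list)


# Hypothetical agent interface: a function that reads/writes shared memory and returns findings.
Agent = Callable[[SharedMemory], list[Finding]]


def functionality_agent(mem: SharedMemory) -> list[Finding]:
    # Records context (the user roles it discovered) for later agents to reuse.
    mem.facts["roles"] = ["admin", "viewer"]
    return [Finding("functionality", "checkout", "total not updated after coupon")]


def ux_agent(mem: SharedMemory) -> list[Finding]:
    # Reuses roles discovered by another agent instead of rediscovering them.
    roles = mem.facts.get("roles", [])
    found = [Finding("ux", "checkout", "coupon error message unclear")]
    if "viewer" in roles:
        found.append(Finding("ux", "settings", "viewer sees disabled controls without explanation"))
    return found


def orchestrate(agents: list[Agent], min_votes: int = 2) -> dict[str, list[str]]:
    mem = SharedMemory()
    for agent in agents:  # run in order, so later agents can read facts earlier ones stored
        mem.findings.extend(agent(mem))

    # Cross-validation as an agreement vote: which distinct agents flagged each target?
    votes = defaultdict(set)
    for finding in mem.findings:
        votes[finding.target].add(finding.agent)
    return {
        "corroborated": sorted(t for t, a in votes.items() if len(a) >= min_votes),
        "needs_review": sorted(t for t, a in votes.items() if len(a) < min_votes),
    }


print(orchestrate([functionality_agent, ux_agent]))
# {'corroborated': ['checkout'], 'needs_review': ['settings']}
```

Running `ux_agent` on its own, or before `functionality_agent`, misses the settings issue because the roles it depends on have not yet been written to shared memory. In a real system, the orchestrator would need to schedule agents with such dependencies in the right order.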

Example: Testing a banking application might involve one agent checking transaction consistency, another validating compliance with financial regulations, and a third evaluating usability for customers. Together, they deliver a result that is accurate, compliant, and user-friendly, something a single agent would be unlikely to achieve.
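The banking scenario above can be sketched as three independent checks over one test run. In this hypothetical Python example, the consistency agent verifies that internal transfers neither create nor destroy money. The compliance agent checks that large transfers were reported, and the usability agent checks that failures are explained in plain language. The `Transfer` fields, the reporting threshold, and the `ERR_` message convention are all assumptions for illustration, not real regulatory rules or a real banking API.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class Transfer:
    tx_id: str
    amount: Decimal
    status: str        # "ok" or "failed"
    reported: bool     # whether the transfer was sent to the compliance report
    user_message: str  # text shown to the customer


# Hypothetical policy value for illustration only; not an actual regulatory figure.
REPORTING_THRESHOLD = Decimal("10000")


def consistency_agent(before: dict, after: dict) -> list[str]:
    """Internal transfers move money between accounts, so the ledger total must not change."""
    issues = []
    if sum(before.values()) != sum(after.values()):
        issues.append("ledger total changed")
    issues += [f"{acct}: negative balance" for acct, bal in after.items() if bal < 0]
    return issues


def compliance_agent(transfers: list[Transfer]) -> list[str]:
    """Large successful transfers must appear in the compliance report."""
    return [f"{t.tx_id}: not reported" for t in transfers
            if t.status == "ok" and t.amount >= REPORTING_THRESHOLD and not t.reported]


def usability_agent(transfers: list[Transfer]) -> list[str]:
    """Failed transfers should be explained in plain language, not raw error codes."""
    return [f"{t.tx_id}: unhelpful message {t.user_message!r}" for t in transfers
            if t.status == "failed" and (not t.user_message or t.user_message.startswith("ERR_"))]


def run_suite(before: dict, after: dict, transfers: list[Transfer]) -> dict[str, list[str]]:
    # Each specialized agent inspects the same run from its own angle.
    return {
        "consistency": consistency_agent(before, after),
        "compliance": compliance_agent(transfers),
        "usability": usability_agent(transfers),
    }


before = {"alice": Decimal("15000"), "bob": Decimal("500")}
after = {"alice": Decimal("3000"), "bob": Decimal("12500")}
transfers = [
    Transfer("t1", Decimal("12000"), "ok", reported=False, user_message="Transfer complete"),
    Transfer("t2", Decimal("50000"), "failed", reported=False, user_message="ERR_LIMIT_402"),
]
report = run_suite(before, after, transfers)
print(report)
```

With the sample data, the ledger total is unchanged (15,500 before and after), so the consistency agent reports nothing. The compliance agent flags `t1`, and the usability agent flags the raw `ERR_LIMIT_402` message on `t2`. No single agent would have surfaced both problems. `Decimal` is used rather than `float` so that balance comparisons are exact.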

Benefits of Multi-Agent Testing

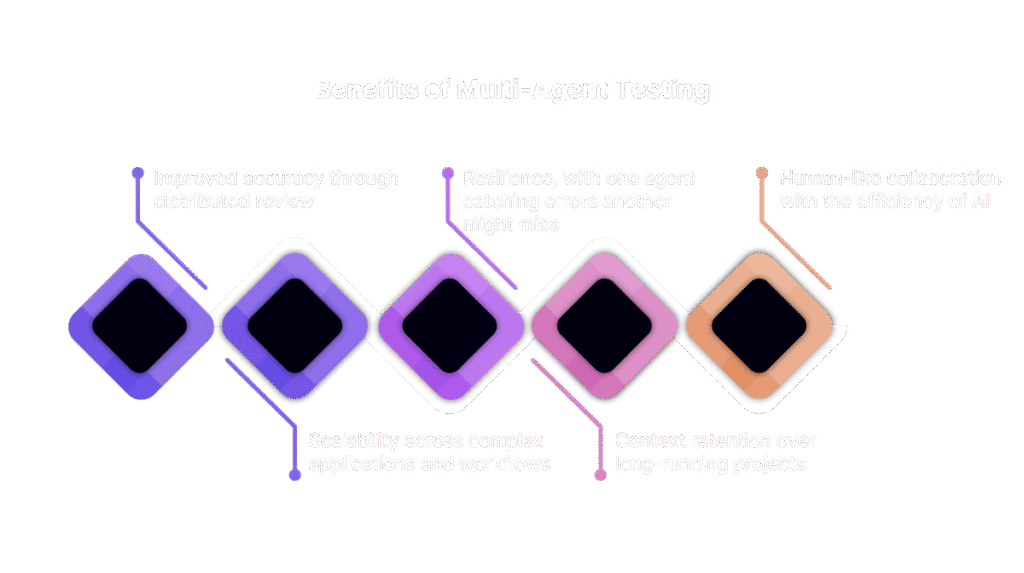

Adopting multi-agent systems offers clear advantages:

- Improved accuracy through distributed review

- Scalability across complex applications and workflows

- Resilience, with one agent catching errors another might miss

- Context retention over long-running projects

- Human-like collaboration with the efficiency of AI

Multi-Agent Testing in Industry Applications

Beyond software validation, multi-agent systems play a role across multiple industries:

- Healthcare: Agents cross-verify diagnoses, treatment recommendations, and patient data privacy.

- Finance: Compliance-focused agents ensure regulatory adherence while others validate transaction integrity.

- Autonomous Systems: Testing navigation, safety, and decision-making simultaneously through specialized agents.

- Enterprise Software: Stress-testing scalability, security, and user experience in parallel.

By combining specialized perspectives, these systems help ensure that core industry needs (accuracy, compliance, safety, and usability) are met without compromise.

Challenges and Considerations

While multi-agent testing unlocks new possibilities, organizations must address key challenges to realize its full value:

- Coordination Overhead: Orchestrating multiple agents effectively is complex. Without clear design, efforts risk duplication or misalignment.

- Data Privacy in Shared Systems: Shared memory enhances context but raises concerns around protecting sensitive data and meeting compliance requirements.

- Rising System Complexity: The more agents involved, the more intricate the interactions, roles, and workflows become, driving up setup and maintenance costs if not managed carefully.

- Lack of Standardization: The absence of common frameworks for interoperability makes adoption and scaling harder across industries.

Recognizing and addressing these hurdles is essential for operationalizing multi-agent solutions successfully.

The Future of Multi-Agent Testing

Industries like finance, healthcare, autonomous vehicles, and enterprise software are demanding more context-aware testing than ever. In such domains, missing context isn’t just costly; it’s unacceptable.

Multi-agent testing signals a shift from isolated AI systems to collaborative intelligence ecosystems that can test, design, deploy, and maintain applications while preserving context continuously. This evolution sets the stage for AI systems that are not only powerful but also reliable, adaptive, and trustworthy.

Final Thoughts

The emergence of multi-agent testing represents a turning point in AI and software quality assurance. By addressing the context challenge, organizations can build systems that are functional, resilient, and compliant, paving the way for next-generation digital transformation.

With Zyrix Test Autopilot, these possibilities move from concept to practice, enabling enterprises to test smarter, faster, and with true contextual awareness.

Ready to experience it? Book a Demo